- 83 Posts

- 466 Comments

151·7 months ago

If I cared I bet I could get the bot to pay me full retail price or more.

Introducing EEVDF

The “Earliest Eligible Virtual Deadline First” (EEVDF) scheduling algorithm is not new; it was described in this 1995 paper by Ion Stoica and Hussein Abdel-Wahab. Its name suggests something similar to the Earliest Deadline First algorithm used by the kernel’s deadline scheduler but, unlike that scheduler, EEVDF is not a realtime scheduler, so it works in different ways. Understanding EEVDF requires getting a handle on a few (relatively) simple concepts. …

… For each process, EEVDF calculates the difference between the time that process should have gotten and how much it actually got; that difference is called “lag”. A process with a positive lag value has not received its fair share and should be scheduled sooner than one with a negative lag value.

In fact, a process is deemed to be “eligible” if — and only if — its calculated lag is greater than or equal to zero; any process with a negative lag will not be eligible to run. For any ineligible process, there will be a time in the future where the time it is entitled to catches up to the time it has actually gotten and it will become eligible again; that time is deemed the “eligible time”.
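To make the lag and eligibility rules concrete, here is a minimal Python sketch under a deliberately simplified model. This is an assumption for illustration, not the kernel's code. Each task is entitled to a fixed fraction of the CPU (the hypothetical `share` field), and entitlement and received service are tracked in milliseconds. Lag is `entitled - received`, a task is eligible only when lag ≥ 0, and, assuming the task does not run in the meantime, its eligible time is the moment its growing entitlement catches up with what it has already received.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    share: float     # hypothetical: fraction of the CPU this task is entitled to (0 < share <= 1)
    entitled: float  # service (ms) the task should have received so far
    received: float  # service (ms) the task actually received

    @property
    def lag(self) -> float:
        # Lag = time the task should have gotten minus time it actually got.
        return self.entitled - self.received

    def is_eligible(self) -> bool:
        # Eligible if and only if lag >= 0.
        return self.lag >= 0

    def eligible_time(self, now: float) -> float:
        # Simplifying assumption: while the task waits, `received` stays fixed
        # and `entitled` grows by `share` ms per ms of wall-clock time.
        if self.is_eligible():
            return now
        return now + (-self.lag) / self.share
```

For example, a task with a 50% share that has received 14 ms against an entitlement of 10 ms has a lag of -4 ms; at time 100 ms it is ineligible and becomes eligible at 108 ms.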

The calculation of lag is, thus, a key part of the EEVDF scheduler, and much of the patch set is dedicated to finding this value correctly. Even in the absence of the full EEVDF algorithm, a process’s lag can be used to place it fairly in the run queue; processes with higher lag should be run first in an attempt to even out lag values across the system.
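The "higher lag runs first" placement rule can be sketched with a priority queue. This is only an illustration of ordering by lag, not the full EEVDF pick (which also involves virtual deadlines) and not the kernel's run-queue implementation; the task names and lag values are hypothetical inputs.

```python
import heapq


def lag_order(tasks: dict[str, float]) -> list[str]:
    """Return task names ordered highest-lag first.

    `tasks` maps a hypothetical task name to its current lag in ms.
    heapq is a min-heap, so lag is negated to pop the largest lag first;
    the name breaks ties deterministically.
    """
    heap = [(-lag, name) for name, lag in tasks.items()]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[1] for _ in range(len(heap))]
```

With lags of -2, 5 and 0 ms for tasks `a`, `b` and `c`, the order is `b`, `c`, `a`: the task that is furthest behind its fair share runs first, which tends to even out lag values.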

End of my little TL;DR

So basically ‘Even more + CFS’.

I want the opposite in a scheduler: bottleneck optimisation for AI and CAD.

I also wish there were an easy way to discover whether my 12th-gen i7 has the AVX-512 instructions fused off or just disabled in microcode. (Early 12th-gen chips had the instructions, but support was unofficial and was discovered by motherboard makers; according to some articles, Intel later fused it off entirely. Allegedly, all it took was running the microcode from the enterprise P-core, which is identical to the one in the consumer parts.) I would love to have the option to set CPU-set affinity and isolation for llama.cpp (with automatic detection of whether the AVX-512 ISA is available) and compare the inference speed with my present strategy.
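A rough starting point on Linux is to read `/proc/cpuinfo`, find which logical CPUs report the `avx512f` (AVX-512 Foundation) flag, and restrict a process to those CPUs with `os.sched_setaffinity`. This is a hedged sketch: it only shows whether the running kernel currently sees the ISA exposed, so it cannot distinguish "fused off" from "disabled in microcode". The helper names are hypothetical, and choosing which cores to isolate for llama.cpp is left to you.

```python
import os


def flags_by_cpu(cpuinfo_text: str) -> dict[int, set[str]]:
    # Map each logical CPU number to the feature flags listed for it
    # in /proc/cpuinfo-formatted text (x86 uses a "flags" line).
    result: dict[int, set[str]] = {}
    current = None
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue
        key = key.strip()
        if key == "processor":
            current = int(value)
            result[current] = set()
        elif key == "flags" and current is not None:
            result[current].update(value.split())
    return result


def avx512_cpus(cpuinfo_text: str) -> set[int]:
    # Logical CPUs whose flags include avx512f.
    return {cpu for cpu, flags in flags_by_cpu(cpuinfo_text).items() if "avx512f" in flags}


def pin_current_process(cpus: set[int]) -> set[int]:
    # Linux-only: restrict the calling process (pid 0) to the given CPUs
    # and return the affinity mask the kernel actually applied.
    os.sched_setaffinity(0, cpus)
    return os.sched_getaffinity(0)


if __name__ == "__main__" and os.path.exists("/proc/cpuinfo"):
    with open("/proc/cpuinfo") as f:
        cpus = avx512_cpus(f.read())
    print("CPUs reporting avx512f:", sorted(cpus) or "none")
```

Because a child process inherits its parent's affinity mask on Linux, calling `pin_current_process` before launching llama.cpp (for example via `subprocess`) would keep the child on those cores as well.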

My understanding is that the primary bottleneck in tensor math is the L2-to-L1 cache bus, but I’m basically just parroting that information from the edge of my understanding.

2·7 months ago

I’m running Textgen in a Fedora WS 38 distrobox container, using an old version of Textgen from more than a month back. When I let the Fedora kernel update and build, it broke the old version of Textgen. I tried the latest version of Textgen, and it works with the updated 6.6 kernel in a new container, but the changes the project has made have ruined it for me, and I have no interest in continuing to run their version. I want to keep the old version. I rolled the host kernel back to 6.5, and the old Textgen works fine.

I swear I saw something about a runtime-loaded version of the Nvidia kernel module, but IIRC that requires UEFI keys to self-sign it.

I already tried loading my own keys into the bootloader, but they get rejected at the last step and the TPM chip overrides them. I have never tried booting into the UEFI environment with KeyTool, and I’m afraid that will be the only way. I was hoping someone here might know the path a little better than my current fog-of-war-like state.

(I have a bunch of custom scripts and mods that the Textgen project broke in one of their major changes.)

67·7 months ago

We should put people like this in charge of the TSA, just like digital crimes often result in government jobs. The guy has proven he knows more than most. Determine his connections and intentions, surveil him, and give the guy a career. He is smart enough to know all the ineffective BS and has the credentials to prove it.

5·7 months ago

I’m no expert. Have you looked up the processors and RAM listed in the OpenWRT table? That will tell you the real details. Then you can git clone OpenWRT and use the gource utility to see what kind of development activity has happened recently in the source code.

I know, it’s a lot of legwork. But really, you’re not buying brands and models; you’re buying one of a couple dozen processors that have had various peripherals added. The radios are just integrated PCI bus cards. A lot of the options still on sale come with processors that are 15+ years old.

The last time I looked (a few months ago) the Asus stuff seemed interesting for a router. However, for the price, maybe go this route: https://piped.video/watch?v=uAxe2pAUY50

17·7 months ago

It is the mission to make sure Osama bin Laden’s long game was successful. He destroyed democracy and freedom exactly like he wanted. Now we have Republican Jihads. He won.

42·7 months ago

And bow to your feudal overlord. Your citizenship was sold to him by your politicians you didn’t care to vote against and now you are a serf that owns nothing.

All billionaires deserve a French Revolution’s reward.

4317·7 months ago

Nothing good comes from criminal billionaires like Altman or Gates.

7·7 months ago

Irrelevant! Your car is uploading you!

4·7 months ago

How many times do you think the same data appears once a model is trained on as many datasets as OpenAI is using now? Even unintentionally, there will be some inevitable overlap. I expect something like data related to OpenAI researchers to recur many times. If nothing else, redundant content repeated across foreign-language data could cause overtraining. Most data is likely machine-curated at best.
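One crude way to put a number on that overlap is to hash normalized documents and count repeats. This is a hedged sketch with hypothetical helper names: it only catches exact duplicates after lowercasing and whitespace collapsing, so it would miss translations and near-duplicates, which real dataset pipelines usually handle with fuzzier techniques such as MinHash.

```python
import hashlib
from collections import Counter


def normalize(text: str) -> str:
    # Crude normalization: lowercase and collapse all whitespace runs.
    return " ".join(text.lower().split())


def duplicate_counts(docs: list[str]) -> Counter:
    # Count how often each normalized document appears, keyed by SHA-256 digest.
    return Counter(hashlib.sha256(normalize(d).encode("utf-8")).hexdigest() for d in docs)


def repeated_fraction(docs: list[str]) -> float:
    # Fraction of documents that are exact copies of one seen elsewhere in the set.
    if not docs:
        return 0.0
    counts = duplicate_counts(docs)
    extra = sum(c - 1 for c in counts.values())
    return extra / len(docs)
```

For `["Hello  world", "hello world", "other"]`, the first two normalize to the same text, so one of the three documents is a repeat.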

14·7 months ago

I bet these are instances of overtraining, where the data has been fed in too many times and the phrases stick.

Models can exhibit some really obscure behavior after overtraining. For example, I have one model, heavily trained on some roleplaying scenarios, that will fully convince the user there is an entire hidden system context, with amazing persistence of bot names and storyline props. It can also override the system context in very unusual ways.

I’ve also seen models that, when they go wrong, almost always drift into The Great Gatsby.

1812·7 months ago

Religion is Comic-Con with fans that kill each other regularly.

Label every instance of hate speech and fantasy magic as primitive nonsense in a way that is obvious to even the dumbest people.

Like: “everyone in this era was illiterate and uneducated, so 5k people present was 5k idiots”; “drug use was prevalent”; “mental health issues like schizophrenia and seizures were called demon possession out of ignorance and were used as parlor tricks to convince idiots that con artists were magic”; “religion was not separate from state in ancient times, and the political struggles and propaganda are obvious”.

There are so many aspects that people only believe because they were taught them as toddlers, when everyone is a gullible idiot. The vast majority of people only follow it because of social network isolation and an inability to connect with people in an open and trusting way outside of this context. Fighting the symptoms of religion is nonsense. Educate to remove the duality of “magic is real in religion”, and create more physical community connectivity to break down entrenched social network isolation.

2·7 months ago

I don’t think so. The issue has been ongoing for the last couple of months. I thought it was a software problem at first because I started seeing lots of dropped connections while streaming, and they seem to have gotten worse over time.

I still have my doubts that the chip is failing, because it can work fine for minutes to hours at a time, then suddenly cut out completely for random lengths of time.

71·7 months ago

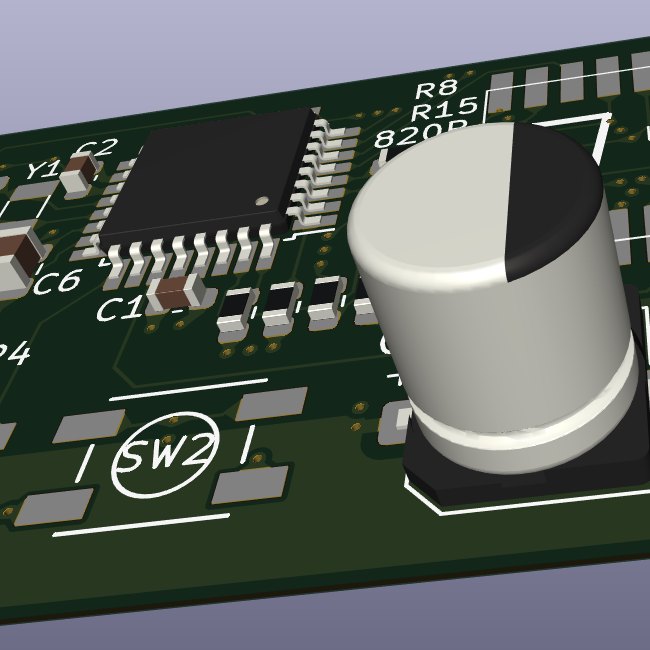

Take apart any old switch-mode power brick, pull the transformer off, and unwind the enameled copper wire. Use an X-Acto knife to carefully and completely remove the enamel around the entire circumference, and test that it wets with solder all the way around (360°) before using it as a replacement.

For my own etched designs that need mechanically solid through holes and for repairs/mods where I need to make a soldering contact, I keep a set of brass micro rivets. The smallest ones I have are 0.9mm and work great with a 1mm hole in the PCB. If you’ve never seen or tried this, no special tools are needed. Place the rivet in the hole and use a pointy object like a dental pick to expand the back side.

2·7 months ago

Not mine. I used Moe’s litterbox as temporary hosting for the OP’s image, which I set as my GNOME background, not knowing how long it would last. Turns out that temp hosting option is probably rate-limited… no big deal. It wasn’t a forever-internet pic anyway.

420·7 months ago

deleted by creator